Agent Protocol Stack: MCP, A2A, and AG-UI

- Introduction to the Agent Protocol Stack: MCP, A2A, and AG-UI

- Architecture and Core Components of the A2A Protocol

- Typical Interaction Flow in the A2A Protocol

- Architecture, Workflow, and Core Technical Features of AG-UI

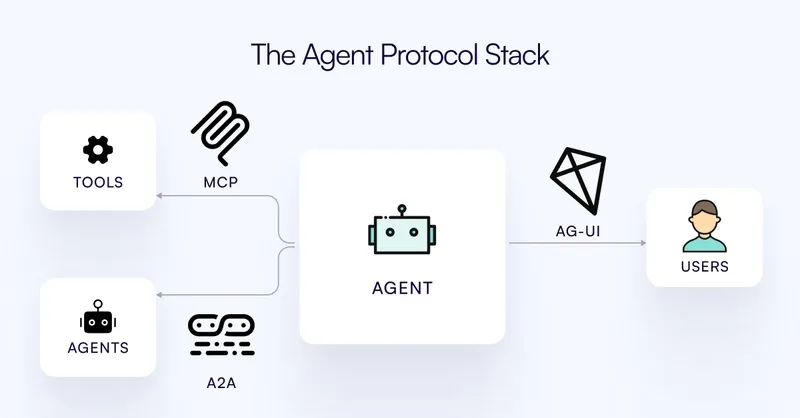

Introduction to the Agent Protocol Stack

MCP (Model Context Protocol)

- Responsibility: Handles communication between the Agent and external tools and data.

- Release Date: Launched by Anthropic in November 2024.

- Function: Provides a standardized method for AI models to interact with external resources (tools, data sources, and systems), simplifying the integration process and allowing AI Agents to access necessary resources without custom connectors.
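To make the "standardized method" concrete, here is a minimal sketch of the kind of message an MCP client sends when it asks a server to run a tool. MCP messages are JSON-RPC 2.0, and `tools/call` is the method used to invoke a tool. The tool name `get_weather` and its arguments are hypothetical and serve only as an illustration.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def make_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "get_weather" is a hypothetical tool, not part of any real server.
request = make_tool_call("get_weather", {"city": "Berlin"})
print(json.dumps(request, indent=2))
```

Because every tool is reached through the same request shape, the Agent needs one client implementation rather than one connector per tool.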

A2A (Agent-to-Agent Protocol)

- Responsibility: Handles communication between Agents.

- Release Date: Launched by Google in April 2025.

- Function: Enables AI Agents to discover, communicate with, and collaborate with each other. It standardizes how Agents share tasks, negotiate capabilities, and coordinate actions across different environments.

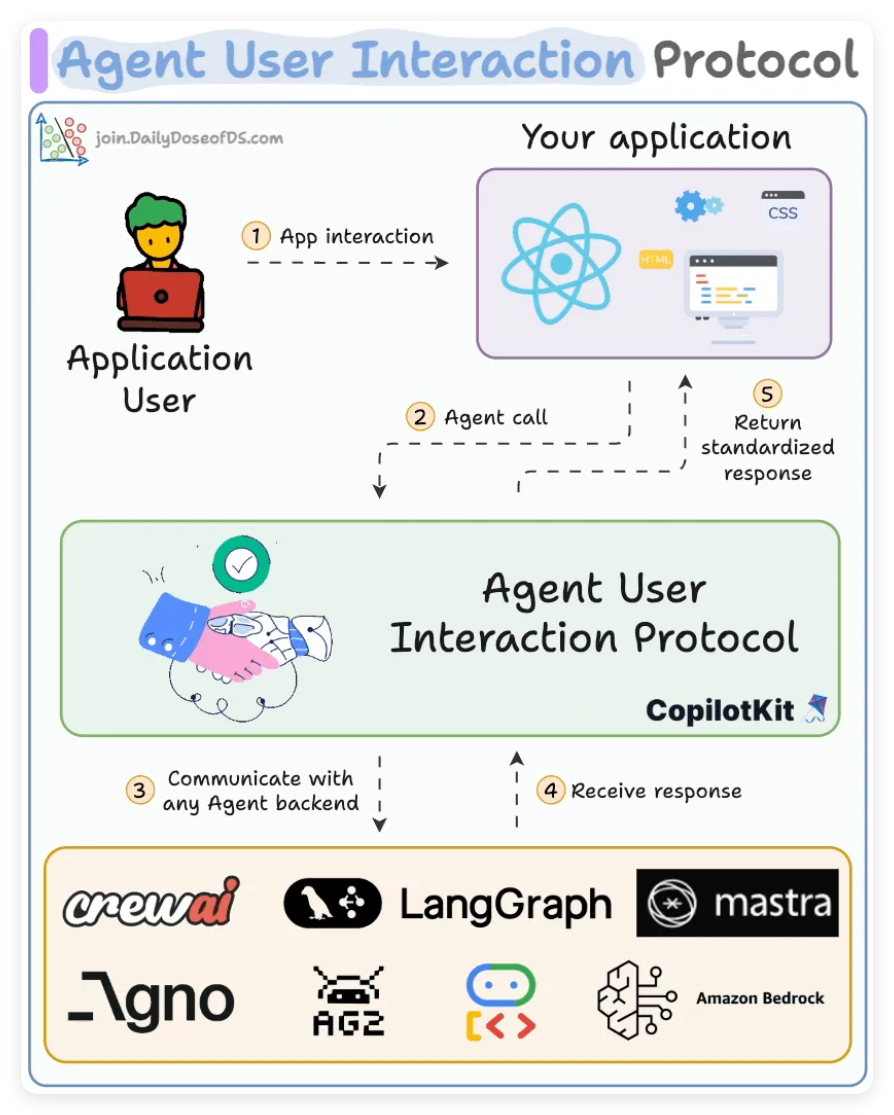

AG-UI (Agent-User Interaction Protocol)

- Responsibility: Handles communication between the Agent and the user interface.

- Release Date: Launched by CopilotKit in May 2025.

- Function: Positioned as a real-time interaction standard between AI Agents and front-end applications. It lets Agents exchange events with the interface in both directions in real time, so Agent-generated content can be presented to users as it is produced.

Note: These three protocols are not in competition but constitute a complementary ecosystem.

Advantages of Combining the Three Protocols

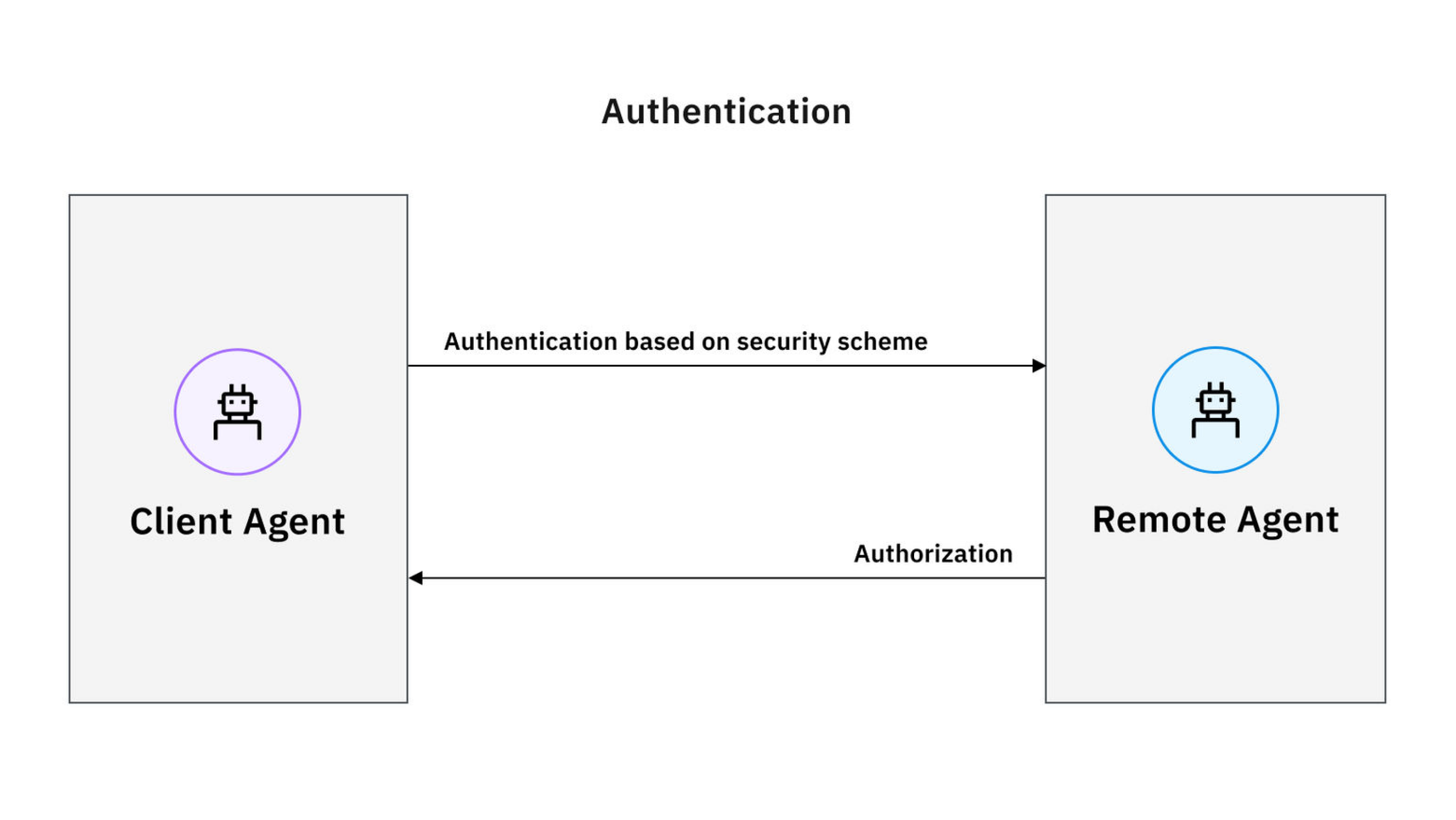

- Security: Each protocol includes security measures to ensure secure and authorized interactions between Agents and with external systems.

- Modularity: User interfaces, Agents, and tools can be developed independently, each focused on a specific task, and then integrated through the protocols.

- Scalability: Because the protocols are standardized, new capabilities can be added without overhauling the existing infrastructure.

- Flexibility: Any framework or tool that implements the protocols can take part.

A2A Protocol

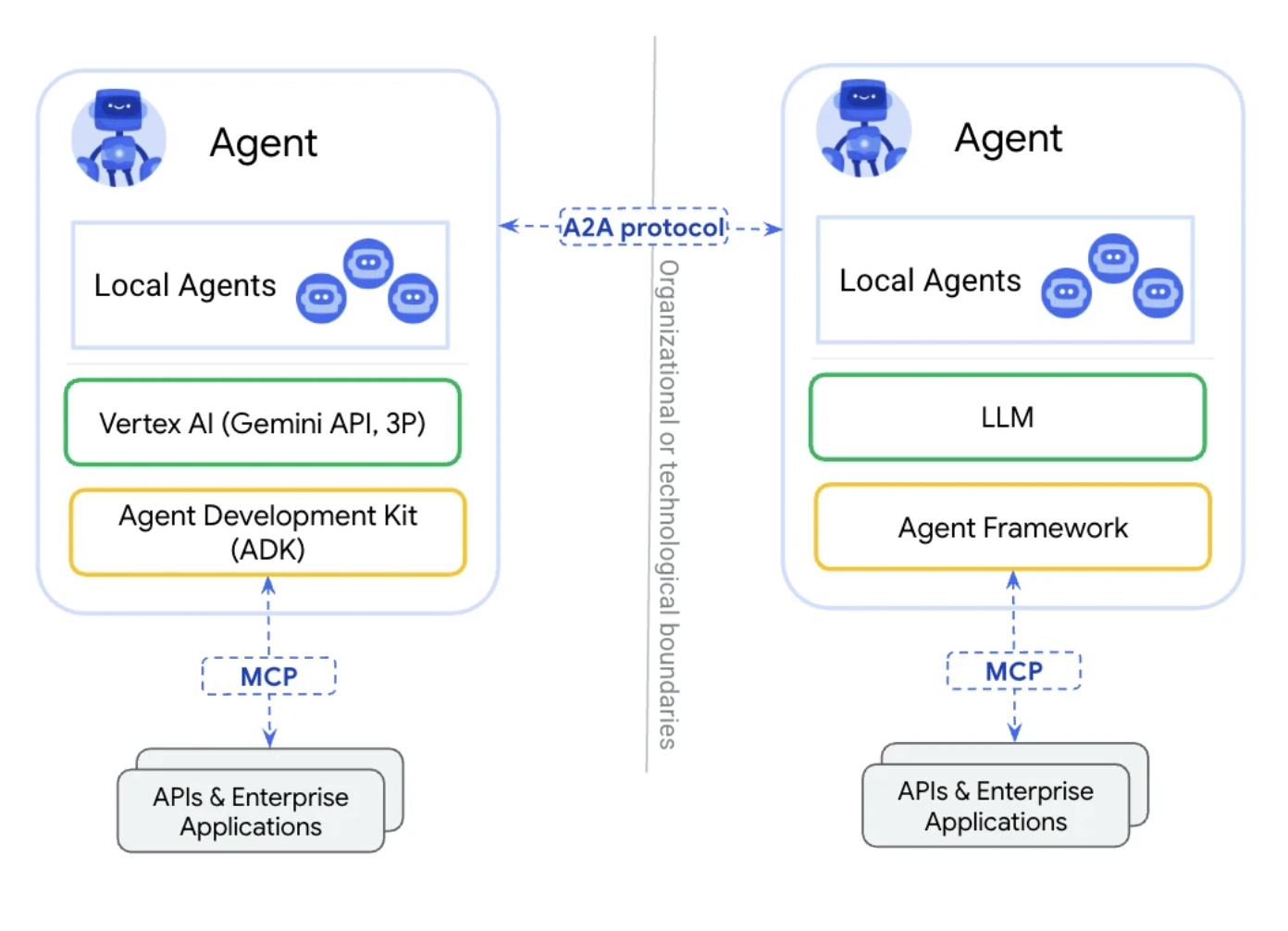

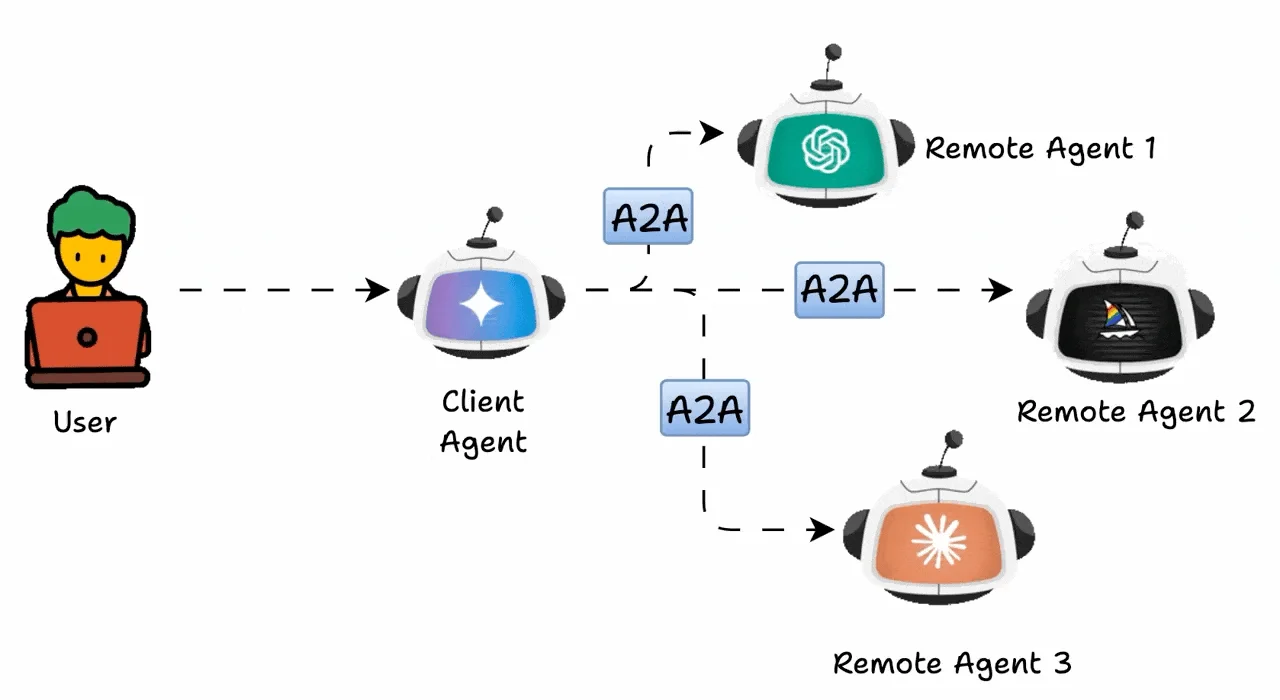

A2A is a protocol for communication between remote Agents, for example Agents from different companies or from different departments within the same company.

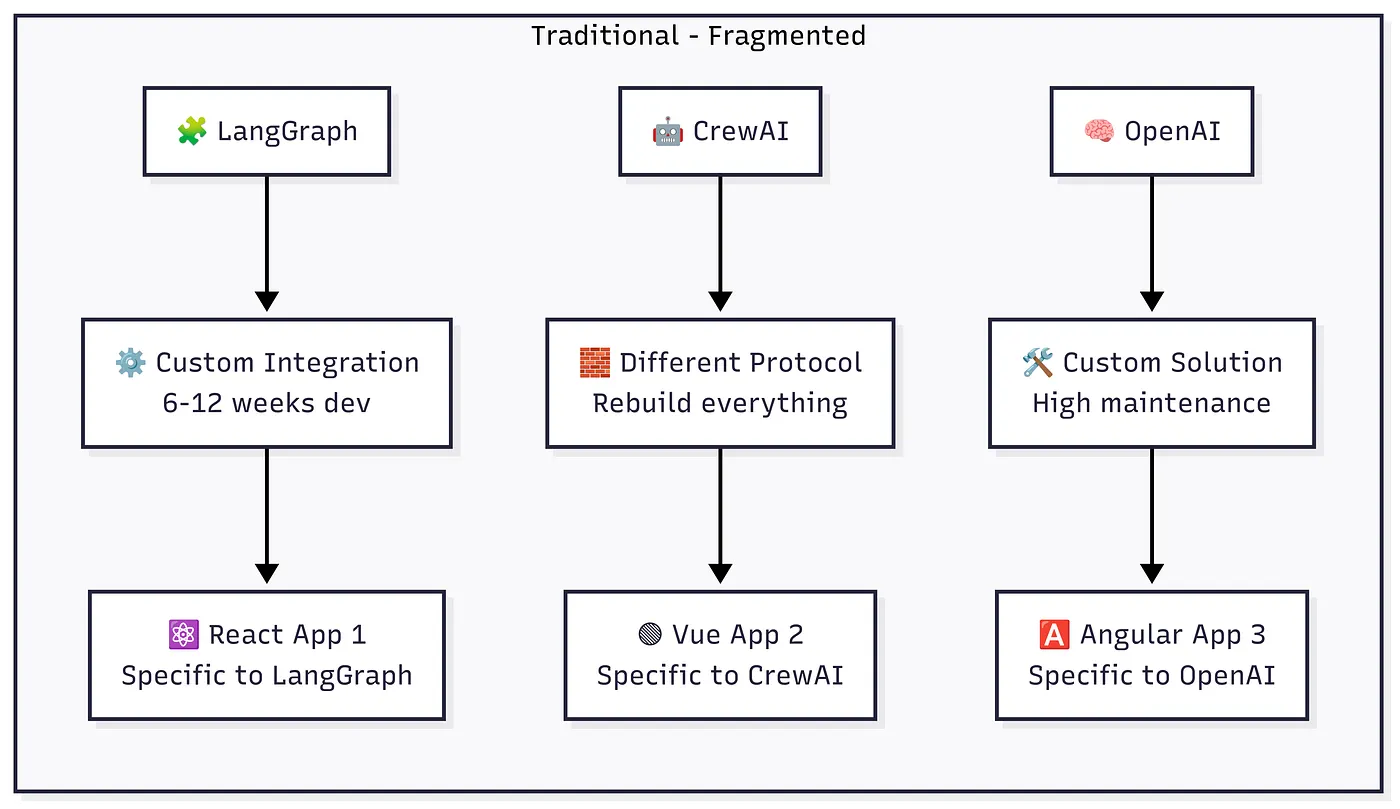

Challenges of Agent Communication

- Redundant Work: Each time a new AI Agent is added, it is necessary to figure out how it communicates with other parts of the ecosystem, which can lead to redundant or "copy-paste" integration code.

- Compatibility Issues: Suppose your natural language processing model runs on Python, while your knowledge graph is hosted in a Java-based microservice. They may transmit data differently, making it difficult for them to work together.

- Scalability Bottleneck: As more specialized Agents (e.g., image recognition, prediction, robotics) are introduced, the number of point-to-point integrations grows rapidly: in the worst case, every pair of Agents needs its own connection. Non-standardized communication then becomes hard to maintain and slows down innovation.

- Coupling: Traditional Agent integration requires coding a custom interface for each service, which couples the Agents tightly. When the logic on one side changes, existing code on the other side often breaks.
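The scalability concern can be made concrete with a little arithmetic. The sketch below assumes the worst case, where every pair of Agents needs its own custom integration, and compares it with the case where each Agent implements a single shared protocol. Both function names are illustrative.

```python
def point_to_point_links(n_agents: int) -> int:
    """Custom integrations needed when every pair of Agents is wired directly."""
    return n_agents * (n_agents - 1) // 2


def shared_protocol_adapters(n_agents: int) -> int:
    """Adapters needed when each Agent implements one shared protocol."""
    return n_agents


for n in (3, 10, 50):
    print(n, point_to_point_links(n), shared_protocol_adapters(n))
```

With 10 Agents the pairwise approach needs 45 integrations against 10 protocol adapters, and with 50 Agents it needs 1225 against 50.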

Vision of the A2A Protocol

- A2A = Agent-to-Agent: A shared set of rules (e.g., API specifications and underlying data models) ensures that each Agent "communicates" in a consistent manner.

- Low Coupling, Plug-and-Play: If a newly deployed Agent follows the same A2A guidelines, it can immediately exchange messages with the rest of the system.

- Unified Monitoring and Security: Standard protocols typically integrate security features (authentication, authorization) and logging, so you can control who does what, when, and where.

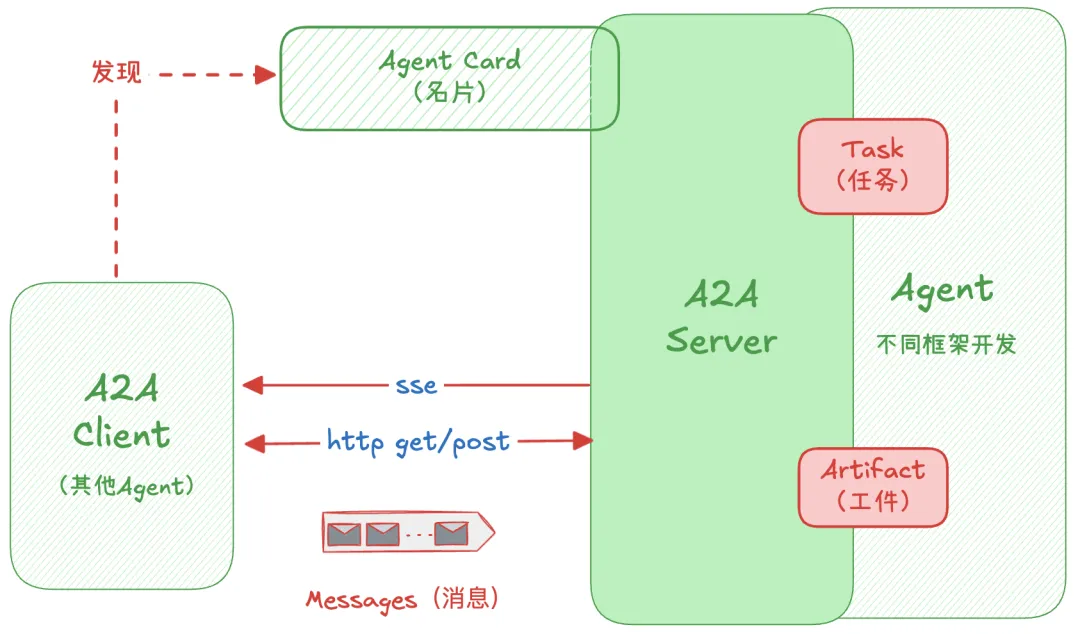

Architecture and Core Components of the A2A Protocol

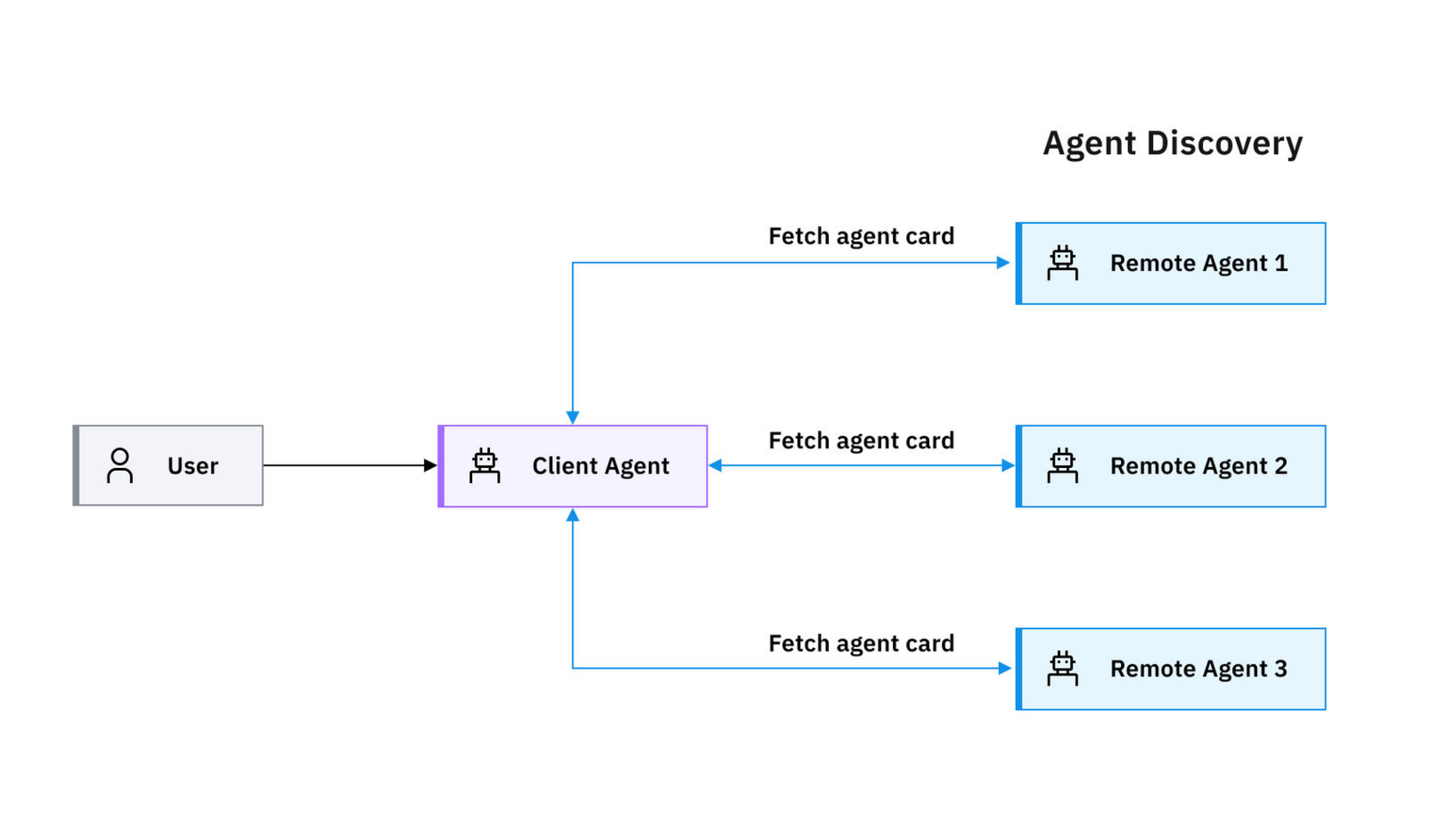

- Agent Card: A self-description document published by an Agent. It is the basis for Agent discovery and interaction, and includes:

  - Identity Information (name, version, description)

  - Capability List (supported task types and modalities, such as "text + file")

  - Communication Endpoints (service URL and supported transports; A2A is based on JSON-RPC 2.0 over HTTP(S), with Server-Sent Events for streaming)

  - Authentication Requirements (e.g., OAuth 2.0, API Key)

- Task: The core unit of collaboration, representing a work request delegated by one Agent to another. A task carries an identifier, a description of the work, and a status that moves through a lifecycle (for example submitted, working, completed).

- Message: The information carrier exchanged between Agents, which can contain various types of content (e.g., text, images, structured data).

- Artifact: The final output of task execution, which can be documents, datasets, analysis results, etc.
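The four components can be pictured as plain data structures. The sketch below is a simplified model and not the official A2A schema: the field names are chosen for readability, and the Agent `report-writer` and its URL are hypothetical.

```python
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class AgentCard:
    name: str
    version: str
    description: str
    url: str                     # communication endpoint
    capabilities: list[str]      # supported modalities or task types
    auth_schemes: list[str]      # e.g. "oauth2", "apiKey"


@dataclass
class Message:
    role: str                    # "user" (client Agent) or "agent" (remote Agent)
    parts: list[dict[str, Any]]  # mixed content: text, files, structured data


@dataclass
class Artifact:
    name: str
    parts: list[dict[str, Any]]


@dataclass
class Task:
    id: str
    description: str
    status: str = "submitted"
    messages: list[Message] = field(default_factory=list)
    artifacts: list[Artifact] = field(default_factory=list)


card = AgentCard(
    name="report-writer",  # hypothetical Agent
    version="1.0.0",
    description="Drafts summary reports from structured data.",
    url="https://agents.example.com/report-writer",
    capabilities=["text", "file"],
    auth_schemes=["oauth2"],
)

task = Task(id="task-1", description="Summarize Q3 sales")
task.messages.append(Message(role="user", parts=[{"kind": "text", "text": "Use the attached CSV."}]))
task.artifacts.append(Artifact(name="summary.md", parts=[{"kind": "text", "text": "# Q3 summary"}]))
task.status = "completed"

print(asdict(card)["capabilities"], task.status, len(task.artifacts))
```

Messages carry the conversation while a task is in progress, and artifacts hold what the task finally produced, which is why the `Task` keeps them in separate lists.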

Typical Interaction Flow in the A2A Protocol

- Service Discovery: The client Agent learns about the capabilities and interfaces of the remote Agent by obtaining the Agent Card of the remote Agent.

- Authentication: Ensures that only authorized Agents can communicate with each other.

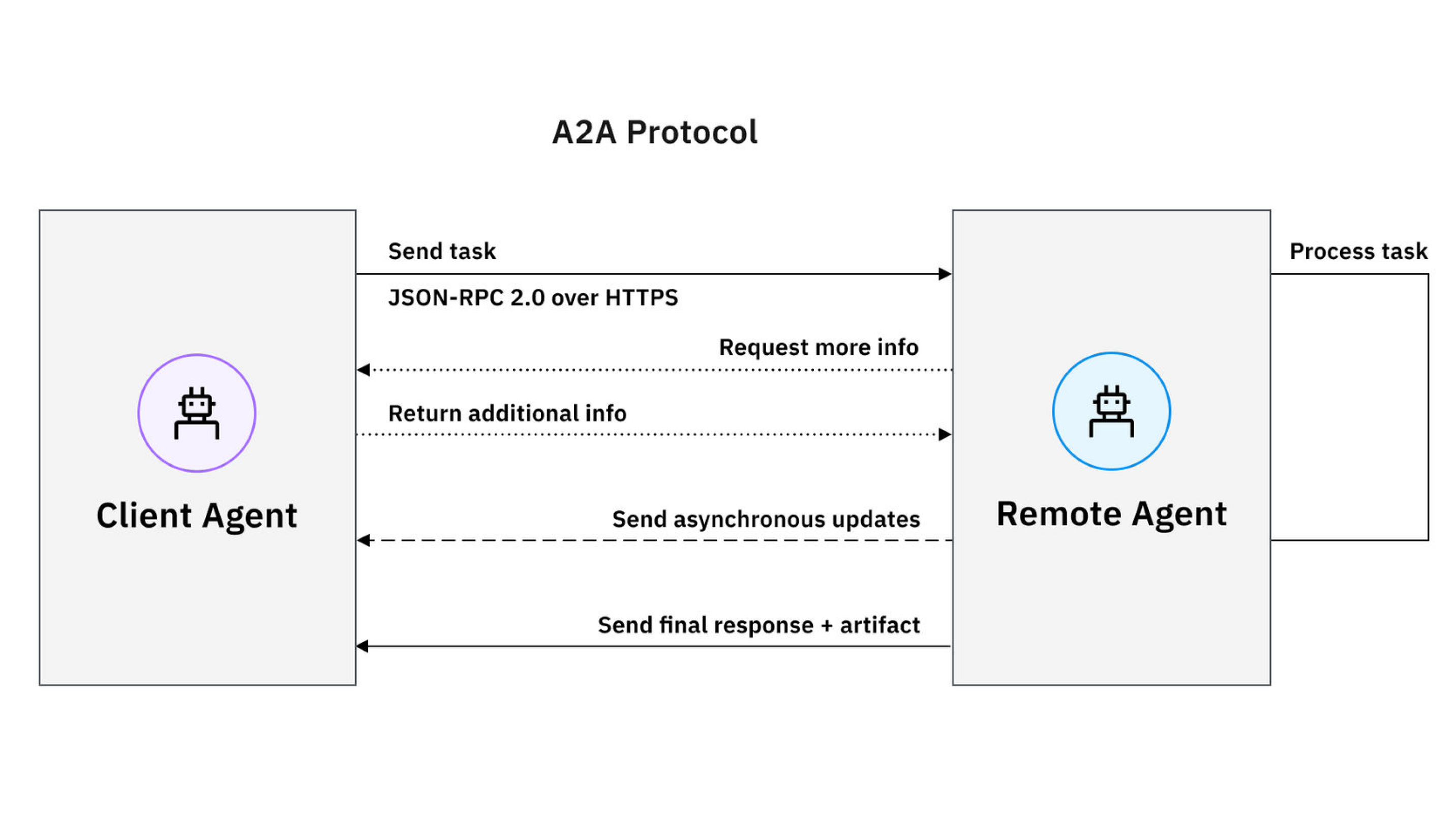

- Communication

  - Task Creation: The client Agent sends a task request to the remote Agent, including the task description and necessary parameters.

  - Task Execution: The remote Agent receives the task and starts processing it, which may involve multiple rounds of message exchange.

  - Status Update: The remote Agent reports task progress to the client Agent periodically or at key milestones.

  - Result Delivery: After the task is completed, the remote Agent returns the artifact to the client Agent.
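The flow above can be sketched as an in-process simulation. This is only an illustration of the order of the steps: a real A2A exchange happens over the network, and the `RemoteAgent` class, the `translator` card, and the API key check are all hypothetical stand-ins.

```python
from typing import Iterator, Optional

AGENT_CARD = {  # hypothetical card published by the remote Agent
    "name": "translator",
    "capabilities": ["text"],
    "auth_schemes": ["apiKey"],
}
VALID_KEYS = {"secret-123"}  # stand-in for a real credential store


class RemoteAgent:
    def get_card(self) -> dict:
        return AGENT_CARD

    def handle_task(self, api_key: str, description: str, text: str) -> Iterator[dict]:
        # Authentication: reject callers without a known key.
        if api_key not in VALID_KEYS:
            raise PermissionError("unauthorized Agent")
        # Status updates, with the artifact delivered in the final update.
        yield {"status": "submitted"}
        yield {"status": "working"}
        result = text.upper()  # placeholder for the real work
        yield {"status": "completed", "artifact": {"name": "result.txt", "text": result}}


def run_client(remote: RemoteAgent, api_key: str) -> Optional[dict]:
    card = remote.get_card()  # service discovery
    if "text" not in card["capabilities"]:
        raise ValueError("remote Agent cannot handle text tasks")
    artifact = None
    # Task creation, execution, and status updates.
    for update in remote.handle_task(api_key, "uppercase the text", "hello"):
        print("status:", update["status"])
        artifact = update.get("artifact", artifact)
    return artifact  # result delivery


artifact = run_client(RemoteAgent(), "secret-123")
print(artifact["text"])
```

The client inspects the card before sending anything, so a capability mismatch is detected before a task is ever created.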

AG-UI Protocol

Why Agentic Applications Need AG-UI

- Agentic applications break the simple request/response model that dominated front-end and back-end development before the Agent era: the client makes a request, the server returns data, the client renders the data, and the interaction ends.

- Characteristics of Agentic Applications:

  - Agents are long-running and stream intermediate work, often across multi-turn sessions.

  - Agents are non-deterministic and can control the application user interface in non-deterministic ways.

  - Agents mix unstructured I/O (such as text and voice) with structured I/O (such as tool calls and state updates) at the same time.

  - Agents need to be composed interactively with the user in the loop: for example, they can call sub-Agents, often recursively.

- AG-UI is an event-based protocol that supports dynamic communication between the front-end and back-end of Agent systems. It is built on the basic protocols of the Web (HTTP, WebSocket) and serves as an abstraction layer designed specifically for the Agent era, bridging the gap between traditional client-server architectures and the dynamic, stateful characteristics of AI Agents.
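As one illustration of how an event-based protocol can ride on plain HTTP, the sketch below frames events as Server-Sent Events, a standard web streaming format. The event type names follow AG-UI's naming, but the payloads are reduced to a minimum and the helper functions are hypothetical.

```python
import json


def encode_sse(event: dict) -> str:
    """Serialize one event as a Server-Sent Events frame."""
    return f"data: {json.dumps(event)}\n\n"


def decode_sse(stream: str) -> list[dict]:
    """Parse a buffer of SSE frames back into event dictionaries."""
    events = []
    for frame in stream.split("\n\n"):
        if frame.startswith("data: "):
            events.append(json.loads(frame[len("data: "):]))
    return events


outgoing = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_CONTENT", "delta": "Hello"},
    {"type": "RUN_FINISHED"},
]

wire = "".join(encode_sse(e) for e in outgoing)
received = decode_sse(wire)
print([e["type"] for e in received])
```

The front end receives each frame as soon as the back end emits it, which is what removes the need for polling.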

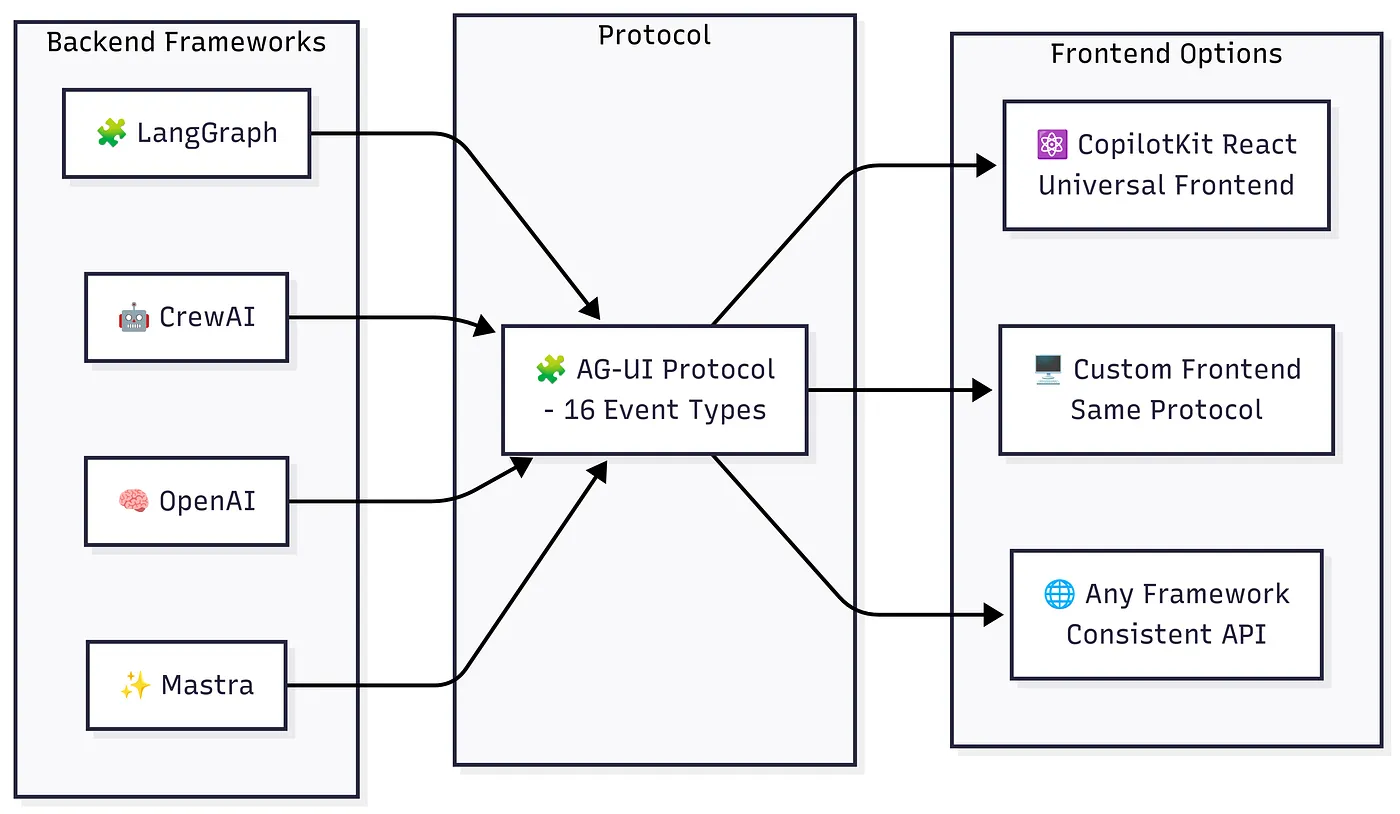

AG-UI is a Universal Adapter for Agentic UI

AG-UI can be regarded as a universal adapter that allows any Agent backend to communicate with any front end.
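The adapter idea can be sketched as follows. Two hypothetical back ends stream output in different native shapes, a small adapter per back end converts that output into one shared event shape, and a single front-end function renders both. None of these functions belong to a real SDK.

```python
from typing import Iterator

Event = dict


def chunk_backend() -> Iterator[str]:
    """Hypothetical back end that streams plain text chunks."""
    yield from ["Hel", "lo"]


def dict_backend() -> Iterator[dict]:
    """Hypothetical back end that streams framework-specific dicts."""
    yield {"token": "Hi"}
    yield {"token": "!"}


def adapt_chunks(source: Iterator[str]) -> Iterator[Event]:
    for chunk in source:
        yield {"type": "TEXT_MESSAGE_CONTENT", "delta": chunk}


def adapt_dicts(source: Iterator[dict]) -> Iterator[Event]:
    for item in source:
        yield {"type": "TEXT_MESSAGE_CONTENT", "delta": item["token"]}


def render(events: Iterator[Event]) -> str:
    """A single front end that only understands the shared event shape."""
    return "".join(e["delta"] for e in events if e["type"] == "TEXT_MESSAGE_CONTENT")


print(render(adapt_chunks(chunk_backend())))
print(render(adapt_dicts(dict_backend())))
```

Adding a third back end means writing one more adapter, and `render` stays unchanged.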

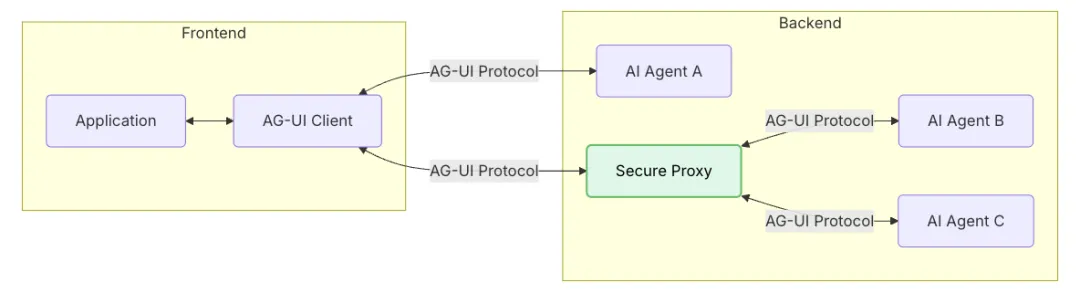

AG-UI Architecture

AG-UI adopts a client-server architecture, which standardizes the communication between Agents and applications.

- Application: User-facing application

- AG-UI Client: Universal communication client (such as HttpAgent or a dedicated client for connecting existing protocols)

- Agent: Backend AI Agent for handling user requests and generating streaming responses

- Secure Proxy: Backend service that provides additional capabilities and acts as a secure proxy between the client and the Agent

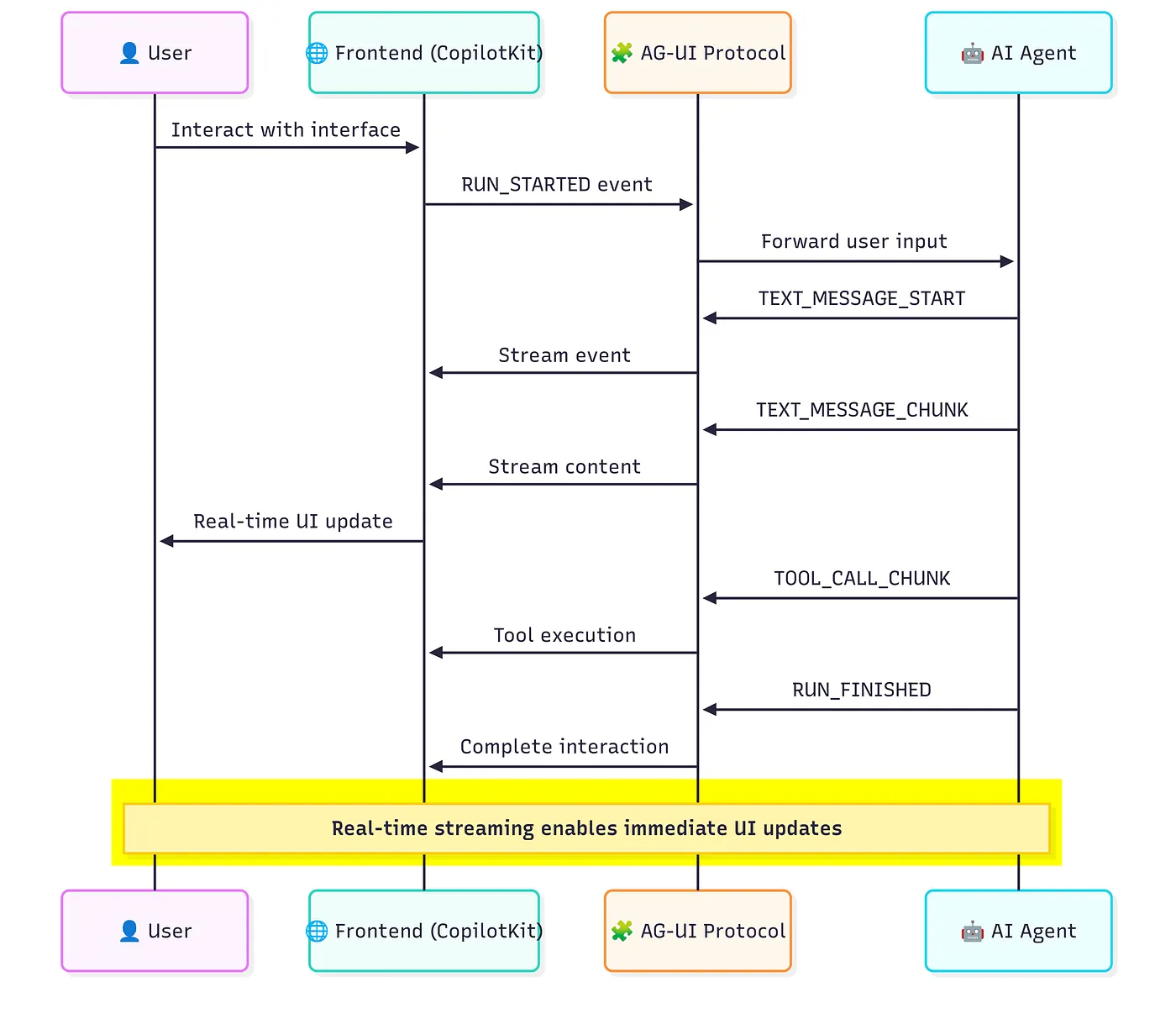

AG-UI Workflow

Core Technical Features of AG-UI

- Event-Driven Real-Time Interaction: AG-UI defines a standardized event model that supports continuous event-stream communication between the front-end and back-end. Every action of the Agent (sending messages, calling tools, updating status, etc.) is pushed to the front-end in the form of events; user operations (inputting messages, clicking buttons, etc.) are also sent to the back-end as events. The front-end can obtain the progress of AI in real-time by subscribing to the event stream, without frequent polling; the back-end can respond to user input immediately by listening to events.

- Two-Way Collaboration: AG-UI supports true two-way collaboration. Agents can continuously output content to users and adjust their behavior based on user feedback (Human-in-the-Loop). The front-end can render the UI in real time based on the Agent's status (such as processing progress or tool call results) and report user actions in the UI back to the Agent. This makes the AI behave like an interactive assistant rather than a passive question-answering system.

- Human-in-the-Loop Collaboration: Allows users to intervene in the Agent's decision-making process, which suits complex workflows that require manual confirmation or guidance.

- Standardized Events: Defines 16 event types (such as TEXT_MESSAGE_CONTENT, TOOL_CALL_START), so front-ends and back-ends share one vocabulary and need less custom integration code.

- Streaming

- Framework Independent

- Tool Progress Updates

- Shared Mutable State

- Quickly Build User Interfaces, Plug and Play
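Several of these features (streaming, tool progress updates, shared state) come down to the front end folding a stream of events into its own view of the run. The sketch below shows such a reducer. The event type names match AG-UI's, but the field names are simplified, and the state delta is applied as a plain key merge as a stand-in, whereas the AG-UI documentation describes state deltas as JSON Patch operations.

```python
def apply_event(ui: dict, event: dict) -> dict:
    """Fold one event into the front end's view of the run."""
    kind = event["type"]
    if kind == "TEXT_MESSAGE_START":
        ui["messages"][event["message_id"]] = ""
    elif kind == "TEXT_MESSAGE_CONTENT":
        ui["messages"][event["message_id"]] += event["delta"]  # streaming text
    elif kind == "TOOL_CALL_START":
        ui["tools"][event["tool_call_id"]] = "running"  # tool progress
    elif kind == "TOOL_CALL_END":
        ui["tools"][event["tool_call_id"]] = "done"
    elif kind == "STATE_SNAPSHOT":
        ui["state"] = dict(event["snapshot"])  # full copy of shared state
    elif kind == "STATE_DELTA":
        ui["state"].update(event["changes"])  # simplified: plain key merge
    return ui


events = [
    {"type": "STATE_SNAPSHOT", "snapshot": {"step": 0}},
    {"type": "TEXT_MESSAGE_START", "message_id": "m1"},
    {"type": "TEXT_MESSAGE_CONTENT", "message_id": "m1", "delta": "Searching"},
    {"type": "TOOL_CALL_START", "tool_call_id": "t1"},
    {"type": "STATE_DELTA", "changes": {"step": 1}},
    {"type": "TOOL_CALL_END", "tool_call_id": "t1"},
    {"type": "TEXT_MESSAGE_CONTENT", "message_id": "m1", "delta": " done."},
]

ui = {"messages": {}, "tools": {}, "state": {}}
for event in events:
    apply_event(ui, event)

print(ui)
```

Unknown event types fall through without changing the UI, so a front end written this way keeps working when the back end starts emitting events it does not yet handle.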
